You've found the perfect data on a website. Now you're staring at hundreds of rows wondering: "There has to be a better way than manually copy-pasting each cell individually into Excel."

You're right. There is.

Whether it's competitor prices that update daily, property listings you need to track, or research data scattered across multiple pages, manually transcribing web data into Excel is soul-crushing work that nobody should have to do.

The good news? You have options. Lots of them.

In this guide, you'll discover five proven methods for getting web data into Excel, from Excel's hidden built-in features to AI-powered tools that do the heavy lifting for you. We'll show you exactly when to use each method, what it costs, and which technical skills (if any) you'll need.

By the end of this guide, you'll never manually copy-paste web data again.

Method 1: Manual copy-paste from a webpage into Excel

Let's start with the method you're probably already using (and, if so, should stop using). Manual copy-paste is exactly what it sounds like: selecting data from a website, hitting Ctrl+C, switching to Excel, and pasting with Ctrl+V. Over and over. And over.

When this actually makes sense:

- You don't need a whole lot of data (20 rows or fewer).

- It's truly a one-time thing and you have no ongoing need for this data.

- The data is perfectly formatted already in the structure you need.

Copying and pasting data from an individual web page works in these cases, but it's prone to error, doesn't scale, and rarely gives you data structured the way you need it.

Method 2: Scraping website data using Excel's Web Query feature

Excel includes a native web scraping tool called "Get Data from Web" that can pull structured data directly from websites into your spreadsheet.

When to use Excel's native web scraping tool

- Both the website and the data you want to scrape are simple (table-based data is best).

- You already have access to Excel, and are comfortable using it.

- You don't need any dynamic data.

- You only need data from a single webpage vs. an entire website.

How to scrape web data using Excel's web scraping tool

- Open Excel and click the Data tab.

- Select From Web in the Get & Transform section.

- Enter the target webpage URL.

- Choose from available tables Excel detects.

- Click Load to import the data.

- Set up automatic refresh if needed (the query's connection properties let you refresh at a fixed interval or whenever the file opens).

Advantages:

- Built into Excel so there's no additional software required (if you already have access).

- User-friendly and easy to set up (point and click).

- You can schedule updates to automatically refresh the data.

Limitations:

- Limited website scraping capabilities, e.g., it struggles with JavaScript-heavy or complex sites and can't handle login requirements or navigation.

- Basic customization and configuration, e.g., you can't structure the data how you need it or differentiate between types of data.

- Breaks when websites change structure.

- Not scalable beyond a single web page, and can't capture page and sub-page data into a unified dataset.

Method 3: Scraping data from a website into Excel using Python

For those who are comfortable with coding, Python provides a robust and flexible solution. Libraries like BeautifulSoup and Selenium allow for a highly customized web scraping experience, making them ideal for people who want more control over their web scraping tasks.

How to Use Python Libraries

- Install Dependencies: Start by installing Python and the libraries you'll use. BeautifulSoup is good for basic scraping, whereas Selenium is better for interactive websites.

- Write Script: Develop a Python script tailored to your scraping needs, whether it’s navigating through multiple web pages, selecting specific data, or handling CAPTCHAs.

- Test: Run your script to ensure it captures the data accurately and debug as necessary.

- Export to Excel: Use Python's pandas library to export the data into an Excel-compatible format like .csv or .xlsx.
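The steps above can be sketched in a few lines. This is a minimal illustration rather than a production scraper: the sample HTML, the `prices` table id, and the `table_to_rows` helper are all made up for the example, and a real script would fetch the page over HTTP instead of using an inline string.

```python
import pandas as pd
from bs4 import BeautifulSoup

# Hypothetical sample page; in a real script this HTML would come from
# an HTTP response rather than a hard-coded string.
SAMPLE_HTML = """
<table id="prices">
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>$9.99</td></tr>
  <tr><td>Gadget</td><td>$24.50</td></tr>
</table>
"""


def table_to_rows(html, table_id):
    """Return (headers, rows) for the table with the given id."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", id=table_id)
    if table is None:
        raise ValueError(f"No table with id={table_id!r} found")
    headers = [th.get_text(strip=True) for th in table.find_all("th")]
    rows = []
    for tr in table.find_all("tr"):
        cells = [td.get_text(strip=True) for td in tr.find_all("td")]
        if cells:  # the header row has no <td> cells, so it is skipped
            rows.append(cells)
    return headers, rows


headers, rows = table_to_rows(SAMPLE_HTML, "prices")
df = pd.DataFrame(rows, columns=headers)

# Strip the currency symbol so Excel treats the column as numeric.
df["Price"] = df["Price"].str.replace("$", "", regex=False).astype(float)

# With no path given, to_csv returns the CSV content as a string.
csv_text = df.to_csv(index=False)
print(csv_text)
```

To write a file instead, pass a path such as `df.to_csv("prices.csv", index=False)`. `df.to_excel("prices.xlsx", index=False)` produces a native workbook, but it typically needs an Excel writer package such as openpyxl installed alongside pandas.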

Pros

- Highly Customizable: Python scripts are flexible and can be tailored to capture exactly what you need, even if it's deeply nested within the webpage.

- Dynamic Content: Browser-automation libraries such as Selenium can handle JavaScript-heavy or dynamically loaded websites, going beyond the capabilities of simpler tools.

- Automation: Once your script is ready and debugged, you can automate it to run as frequently as you need, or even on a schedule.

Cons

- Technical Barrier: If you lack the technical know-how to install libraries and write code, Python can be a big hurdle.

- Time-Consuming: Developing and maintaining a Python script could be time-intensive, particularly for complex scraping tasks.

- Maintenance: Websites often update their layout or implement new features, which could break your script without regular maintenance.
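One way to soften the maintenance problem is to have the script fail loudly when the page no longer looks the way it did when you wrote the scraper. The sketch below assumes your script has already collected the table's header names; `EXPECTED_COLUMNS` and `check_layout` are hypothetical names chosen for illustration.

```python
# Hypothetical column names this scraper expects to find on the page.
EXPECTED_COLUMNS = ["Product", "Price"]


def check_layout(headers, expected=EXPECTED_COLUMNS):
    """Raise an error if any expected column is missing from the scraped headers."""
    missing = [name for name in expected if name not in headers]
    if missing:
        raise RuntimeError(
            f"Page layout may have changed; missing columns: {missing}"
        )
    return True


# The layout the script was written for passes the check.
check_layout(["Product", "Price", "Stock"])

# A renamed column is caught before any bad data is exported.
try:
    check_layout(["Item", "Price"])
except RuntimeError as error:
    message = str(error)
    print(message)
```

A check like this doesn't fix a broken scraper, but it turns silent bad data in your spreadsheet into an obvious error you can act on.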

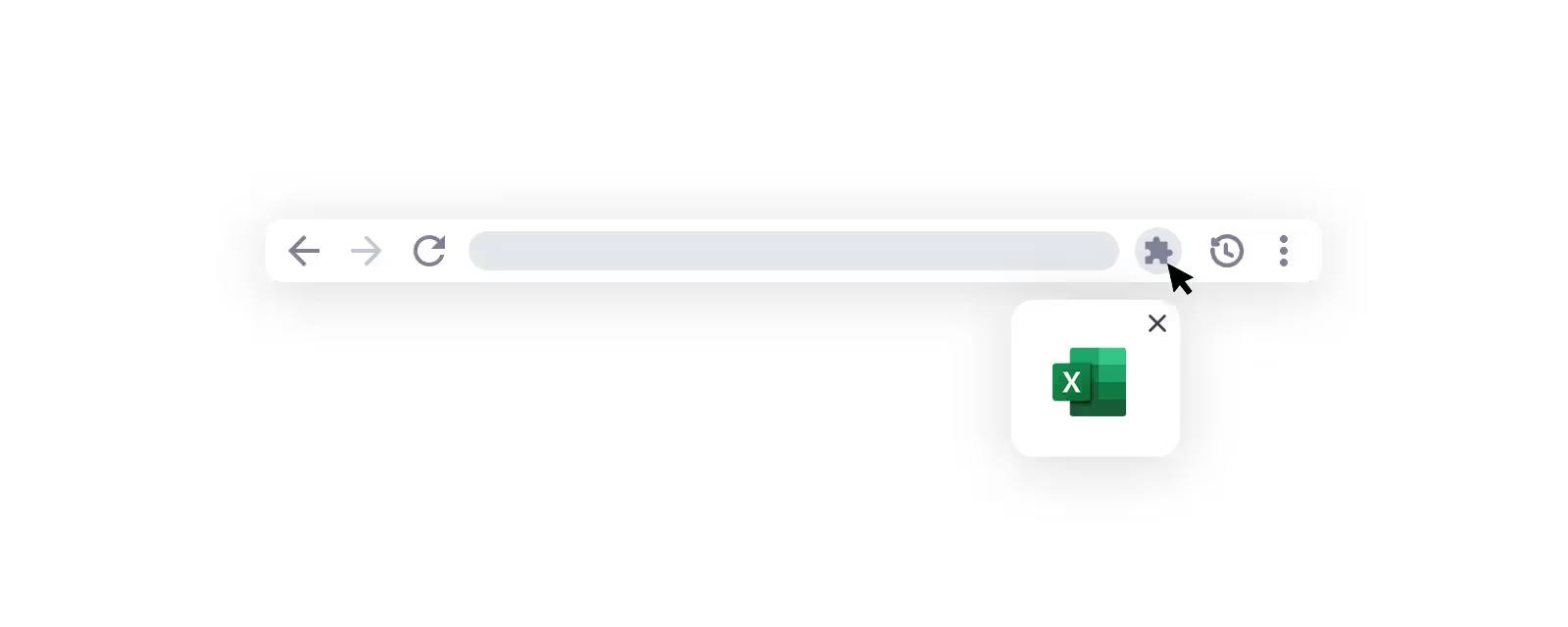

Method 4: Browser Extensions

Not a coder but still want more functionality than what Excel’s Web Query offers? Browser extensions might be the solution for you. These tools can be added to your web browser and allow you to scrape data from websites with just a few clicks. With user-friendly interfaces that let you specify what data to extract, you can scrape data directly into an Excel-compatible format.

How to Use Browser Extensions

- Add Extension: Install a web scraping extension compatible with your browser.

- Navigate to Website: Open the website from which you want to scrape data.

- Configure Settings: Use the extension’s interface to specify the data elements you want to extract, including any parameters.

- Run and Export: Once configured, run the extension to collect the required data, which you can export into an Excel-compatible format.

Pros

- Ease of Use: Browser extensions typically guide you through the data extraction process, making it easier for non-technical users.

- Speed: Extensions allow you to scrape data quickly, particularly when you're familiar with their features.

- No Additional Software: Browser extensions operate within your web browser, negating the need for other software or applications.

Cons

- Limited Capabilities: Some browser extensions can't handle complex website structures or dynamically loaded content as effectively as Python libraries.

- Browser-Specific: Extensions are usually meant for a specific browser, so you might face limitations if you switch to a different one.

- Maintenance and Updates: You'll need to keep both your browser and the extension updated to ensure they continue to work well together.

Method 5: Use Browse AI

What if there was a tool that combined the ease-of-use of browser extensions with the deep customization options of Python libraries? Enter Browse AI. This intuitive platform enables you to train a custom robot to extract or monitor data from any website and turn it into a spreadsheet within minutes, without writing a single line of code.

How to Use Browse AI

- Sign Up or Log In: First things first, head over to Browse AI's website to sign up for free or log in.

- Pick a Task: From the user-friendly dashboard, choose whether you want to extract data once or set up a periodic monitor. You can create a custom task or even use a prebuilt robot for popular websites.

- Train Your Robot: Show your robot how to navigate the website and identify the data you want to scrape.

- Run and Export: Run your robot to collect the data and download the CSV file (compatible with Excel), or use an integration such as Zapier or Make to export it to Excel automatically.

Pros

- User-Friendly: Browse AI’s intuitive interface is designed to be as simple as choosing what you want to scrape and letting the robot do the work.

- Customizable and Scalable: From small one-off tasks to large projects, Browse AI can be scaled and tailored to meet your needs.

- Automated Monitoring: Your robot can run and notify you at scheduled intervals, ensuring you always have the most up-to-date data.

Cons

- Budget: While Browse AI offers free trials and affordable plans, it's a paid service, which might be a factor depending on your budget.

- Learning Curve: Though we strive for ease of use, every tool has a learning curve. But with the help center and supportive team, you'll be up and running in no time.

So, there you have it: five different ways to scrape data from a website into Excel. Each approach comes with its own set of pros and cons, and the best method for you will depend on your actual needs, technical expertise, and the complexity of the task at hand. Whether you’re looking for a quick one-off data extraction or need to set up an intricate, ongoing data gathering operation, there's a solution that fits.

And if you're looking for an option that combines user-friendliness with high-level customization, Browse AI stands out as a one-stop solution. It adapts to various levels of expertise and project scopes, so you can easily turn any website into a spreadsheet in just a few minutes.

FAQs for scraping data from a website to Excel